An MIT study has found a critical flaw in vision-language models (VLMs) that could have serious consequences for their use in healthcare and other high-stakes settings.

In the study, MIT researchers found that VLMs are extremely likely to make mistakes in real-world situations because they don't understand negation — words like "no" and "doesn't" that specify what is false or absent. "Those negation words can have a very significant impact, and if we are just using these models blindly, we may run into catastrophic consequences," says Kumail Alhamoud, an MIT graduate student and lead author of the study.

The researchers illustrate the problem with a medical example: Imagine a radiologist examining a chest X-ray and noticing that a patient has swelling in the tissue but does not have an enlarged heart. If she uses a vision-language model to search for reports from similar patients, the model may fail to distinguish reports that mention both conditions from reports that mention tissue swelling without an enlarged heart. If the model mistakenly retrieves reports with both conditions, the diagnostic implications could be significant: a patient with tissue swelling and an enlarged heart likely has a cardiac-related condition, but without an enlarged heart, there could be several different underlying causes.

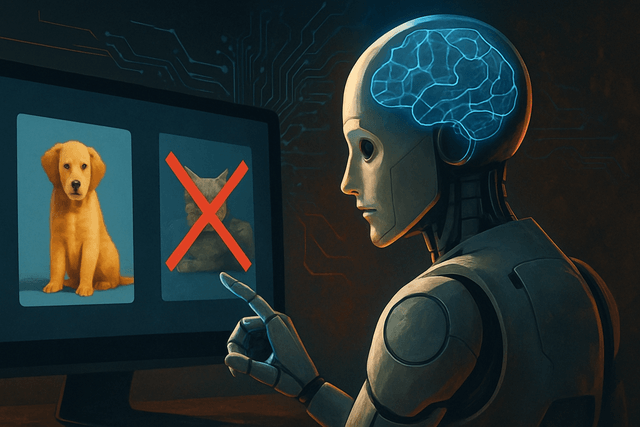

When testing the ability of vision-language models to identify negation in image captions, the researchers found the models often performed no better than random guessing. Building on these findings, the team created a dataset of images with corresponding captions that include negation words describing missing objects. They showed that retraining a vision-language model with this dataset improves performance when the model is asked to retrieve images that do not contain certain objects. It also boosts accuracy on multiple-choice question answering with negated captions. However, the researchers caution that more work is needed to address the root causes of this problem.
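To make "as well as a random guess" concrete, here is a minimal sketch of how multiple-choice accuracy can be compared with a chance baseline. It is not the researchers' evaluation code. The function names, the four-option format, and the answer and prediction lists are all made up for illustration.

```python
def multiple_choice_accuracy(predictions, answers):
    """Fraction of questions where the chosen option index matches the answer."""
    if not answers or len(predictions) != len(answers):
        raise ValueError("predictions and answers must be non-empty and equal in length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


def chance_accuracy(num_options):
    """Expected accuracy of picking one of num_options uniformly at random."""
    return 1.0 / num_options


# Made-up example: 8 questions with 4 caption options each (an assumption).
answers = [0, 2, 1, 3, 0, 1, 2, 3]
predictions = [0, 1, 0, 0, 2, 1, 3, 1]

accuracy = multiple_choice_accuracy(predictions, answers)
baseline = chance_accuracy(4)
print(f"accuracy={accuracy:.2f}, chance={baseline:.2f}")  # accuracy=0.25, chance=0.25
```

In this toy case the model gets 2 of 8 questions right, which is exactly what random guessing among four options would be expected to achieve.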

"This does not just happen for words like 'no' and 'not.' Regardless of how you express negation or exclusion, the models will simply ignore it," Alhamoud says. This was consistent across every VLM they tested. The underlying issue stems from how these models are trained. "The captions express what is in the images — they are a positive label. And that is actually the whole problem. No one looks at an image of a dog jumping over a fence and captions it by saying 'a dog jumping over a fence, with no helicopters,'" explains senior author Marzyeh Ghassemi. Because the image-caption datasets don't contain examples of negation, VLMs never learn to identify it.
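The effect of ignoring negation on retrieval can be shown with a deliberately simple toy. This is not how a VLM works internally and is not taken from the study. The word-matching scorers, the `NEGATION_WORDS` set, and the two example "images" (represented as sets of object names) are assumptions chosen only to show the failure pattern.

```python
# Toy illustration only: images are represented as sets of object names.
NEGATION_WORDS = {"no", "not", "without"}


def negation_blind_score(query, image_objects):
    """Count query words that name objects in the image, ignoring negation."""
    return sum(1 for word in query.lower().split() if word in image_objects)


def negation_aware_score(query, image_objects):
    """Reward present objects, but flip the reward for a word right after a negation."""
    score = 0
    negate_next = False
    for word in query.lower().split():
        if word in NEGATION_WORDS:
            negate_next = True
            continue
        present = word in image_objects
        if negate_next:
            score += -1 if present else 1
            negate_next = False
        elif present:
            score += 1
    return score


query = "dog without fence"
image_with_fence = {"dog", "fence"}
image_without_fence = {"dog"}

# The blind scorer prefers the image that contains the excluded object.
print(negation_blind_score(query, image_with_fence),
      negation_blind_score(query, image_without_fence))  # 2 1
# The aware scorer prefers the image that actually matches the query.
print(negation_aware_score(query, image_with_fence),
      negation_aware_score(query, image_without_fence))  # 0 2
```

A scorer that treats "without fence" the same as "fence" ranks the wrong image first, which is the kind of retrieval error the researchers describe.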

"If something as fundamental as negation is broken, we shouldn't be using large vision/language models in many of the ways we are using them now, without intensive evaluation," says Ghassemi, an associate professor in the Department of Electrical Engineering and Computer Science and a member of the Institute for Medical Engineering and Science. The research, which will be presented at the Conference on Computer Vision and Pattern Recognition, was conducted by a team including researchers from MIT, OpenAI, and Oxford University.

These findings matter for high-stakes domains such as safety monitoring and healthcare. As part of the work, the researchers developed NegBench, a benchmark for evaluating vision-language models on negation-specific tasks, as a step toward more robust AI systems that handle nuanced language in applications such as medical diagnostics and content retrieval.