A team of researchers led by Boyuan Chen at Duke University has created a framework called WildFusion that gives robots human-like perception abilities for navigating difficult outdoor environments.

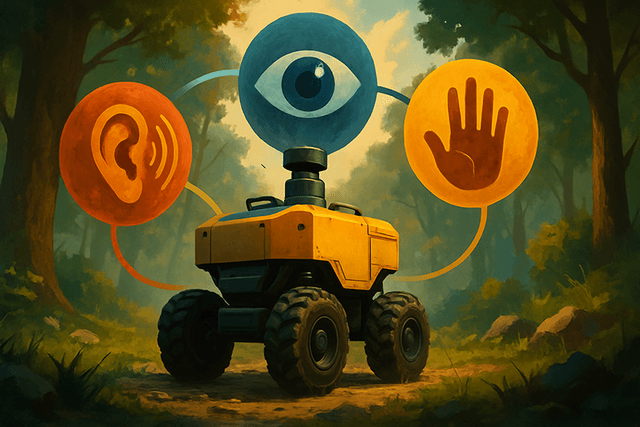

Unlike conventional robots that rely solely on visual data from cameras or LiDAR, WildFusion equips a quadruped robot with additional senses of touch and vibration. This multi-sensory approach allows the robot to build richer environmental maps and make better decisions about safe paths through challenging terrain.

"WildFusion opens a new chapter in robotic navigation and 3D mapping," said Boyuan Chen, Assistant Professor at Duke University. "It helps robots to operate more confidently in unstructured, unpredictable environments like forests, disaster zones, and off-road terrain."

The system works by integrating data from multiple sensors. Contact microphones record vibrations from each step, distinguishing between surfaces like crunchy leaves or soft mud. Tactile sensors measure foot pressure to detect stability, while inertial sensors track the robot's balance. All this information is processed through specialized neural encoders and fused into a comprehensive environmental model.
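The encode-then-fuse idea can be illustrated with a minimal Python sketch. It is not the team's implementation: the modality names, feature sizes, one-layer encoders with random weights, and fusion by simple concatenation are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical raw feature sizes per modality (not taken from the article).
RAW_DIMS = {"audio": 64, "tactile": 4, "imu": 6, "vision": 128}
EMBED_DIM = 16  # assumed size of each modality's embedding


def make_encoder(in_dim, out_dim):
    """Build a tiny one-layer encoder; random weights stand in for trained ones."""
    w = rng.normal(scale=0.1, size=(in_dim, out_dim))
    b = np.zeros(out_dim)

    def encode(x):
        return np.tanh(np.asarray(x, dtype=float) @ w + b)

    return encode


encoders = {name: make_encoder(dim, EMBED_DIM) for name, dim in RAW_DIMS.items()}


def fuse(readings):
    """Encode each available modality and concatenate the embeddings.

    A modality missing from `readings` contributes a block of zeros, so the
    fused vector always has the same length.
    """
    parts = []
    for name in RAW_DIMS:
        if name in readings:
            parts.append(encoders[name](readings[name]))
        else:
            parts.append(np.zeros(EMBED_DIM))
    return np.concatenate(parts)


# Example: one footstep with no camera frame available.
step = {"audio": np.ones(64), "tactile": np.ones(4), "imu": np.ones(6)}
fused = fuse(step)
```

Keeping one encoder per sensor means a modality can be added or dropped without changing the others, which is one plausible way to get the kind of modularity the article describes.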

At the heart of WildFusion is a deep learning architecture that represents the environment as a continuous mathematical field rather than as a set of disconnected points. This allows the robot to "fill in the blanks" when sensor data is incomplete, much as humans navigate intuitively with only partial information.
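To make the "continuous field" idea concrete, here is a small Python sketch of a function that can be queried at any 3D coordinate, not only where a sensor took a sample. Everything in it is an assumption for illustration: the network size, the random weights standing in for trained ones, the latent vector, and the choice of a single traversability-like score as the output.

```python
import numpy as np

rng = np.random.default_rng(0)

LATENT_DIM = 8  # hypothetical size of the fused sensor embedding
HIDDEN = 32     # hypothetical hidden-layer width

# Random weights stand in for trained parameters.
W1 = rng.normal(scale=0.5, size=(3 + LATENT_DIM, HIDDEN))
b1 = np.zeros(HIDDEN)
W2 = rng.normal(scale=0.5, size=(HIDDEN, 1))
b2 = np.zeros(1)


def traversability_field(points, latent):
    """Return a score in (0, 1) for each continuous (x, y, z) point.

    The same latent vector conditions every query, so the field is defined
    everywhere in space rather than only at sampled locations.
    """
    points = np.atleast_2d(np.asarray(points, dtype=float))
    cond = np.broadcast_to(latent, (points.shape[0], LATENT_DIM))
    hidden = np.tanh(np.concatenate([points, cond], axis=1) @ W1 + b1)
    logits = hidden @ W2 + b2
    return 1.0 / (1.0 + np.exp(-logits[:, 0]))


latent = rng.normal(size=LATENT_DIM)

# Query arbitrary coordinates, including ones between any measured points.
queries = np.array([[0.0, 0.0, 0.0], [0.25, 0.1, 0.0], [1.7, -0.3, 0.05]])
scores = traversability_field(queries, latent)
```

Because the field is a function rather than a stored point cloud, gaps in the raw data do not leave holes in the map: the model still returns a prediction there, with quality depending on how well it was trained.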

The technology was successfully tested at Eno River State Park in North Carolina, where the robot confidently navigated dense forests, grasslands, and gravel paths. "These real-world tests proved WildFusion's remarkable ability to accurately predict traversability," noted Yanbaihui Liu, the lead student author.

Looking ahead, the team plans to incorporate additional sensors like thermal and humidity detectors to further enhance the robot's environmental awareness. With its modular design, WildFusion has vast potential applications beyond forest trails, including disaster response, environmental monitoring, agriculture, and inspection of remote infrastructure.